High resolution audio, asynchronous USB,

oversampling, upsampling and stuff:

The current state of affairs

by Pedja Rogic

- Part 1: Hi-res digital audio

- Part 2: PC, USB, and hi-res audio

- Part 3: Oversampling and upsampling

- Part 4: Hi-res, non-oversampling

- Part 5: Surpassing the oversampling

- Part 6: PC opportunities

Part 1: Hi-res digital audio

We are probably in the middle of big changes in the audio world. The source, the media, the format, and, probably most important of all, the way music is treated, or commonly “used”, all look different than they did only several years ago. These changes are almost entirely associated with personal computers and their technological derivatives, and with the way these have established themselves as audio gear.

Several reasons have pushed the PC so strongly forward into the audio world. Firstly, a PC with its associated discs is extremely convenient for music storage. Secondly, today’s fast internet has become a major, practically dominant communication channel, turning downloads and online streaming into seriously competitive forms of buying and listening to music, and once again pushing classic physical media aside. Finally, the latest technical developments have made it possible to include even a plain, typical home PC in an audio chain of the highest quality.

Also, the year 2010 appears to be a breakthrough regarding the use of the USB interface in so-called high resolution digital audio. This fact, together with the apparent lack of any previously adopted standard in this domain, is quickly turning the PC into a de facto standard source for hi-res digital audio. It is also one of the reasons why this year was among the most important in the process that made the PC a recognized, regular audio source, and after which classic high end audio gear, the kind that cannot connect or communicate to a PC, must even take a somewhat secondary role.

“High resolution” digital audio 1

This process is actually progressing quite rapidly and, judging by the current rate of sales decline, the CD, which was the major standard for music for more than 20 years, may practically disappear from the stores in its current physical shape within the next couple of years.

Of course, this won’t necessarily consign the so-called “CD format” to history, because the format itself can migrate from physical media to PC based systems. At this moment, however, music in the classic CD format (44.1 kHz / 16 bits) can still be purchased mostly in the classic way, as a physical CD, and only sometimes as a download. In that sense, nothing has yet changed about the way the CD is sold, but since internet speeds and local disc capacities keep increasing, there is no reason for 44.1/16 not to move to the web stores. Media for local storage of downloaded music is getting inexpensive: hundreds of GBytes can already be purchased for several tens of EUR, with all the convenience of, say, a removable USB hard disc.2

On the hi-res side, a physical standard such as DVD-A was never a real success, and 96 kHz to 192 kHz / 24 bit files are usually available only for download. At this moment this part of the market consists of dedicated consumers, who are, it is understood, ready either to pay for an adequate internet connection or to accept longer download times.3

As a result, some of us on this side of the business have lately been faced with strong demands to offer audio devices for PC that are compatible with up to 192/24. The claim is that anything less is already “obsolete” within the scope of high quality audio. Indeed, the quantity of material available in hi-res formats is increasing, and some important record companies have also accepted the hi-res challenge by reissuing their old recordings in hi-res digital formats and offering them for download via major web stores.

Personally, I have no doubt about the advantages of hi-res, or that the future of high end audio is significantly tied to some form of it, and I have just proudly put my name to the world’s first non-oversampling 192 kHz / 24 bit USB D/A converter. Still, a step into the real world of hi-res recordings can at this moment give us a different perspective.

Firstly, since music moved online, the mass market has not shown a tendency to download music even in CD quality, and up to now a significant part of it looks rather satisfied with 44.1/16 compressed into lossy mp3. The vast majority of today’s music downloads, which are the fastest growing part of the music market, are mp3 or similar compressed formats. To a big extent this is certainly caused by the insufficient bandwidth of today’s standard internet connections for convenient download of uncompressed files,4 but then again, one shouldn’t neglect the significant share of consumers who are not that concerned with actual audio quality. Anyway, this is how things have settled and how they work for now, and it may be the strongest reason why many music publishers are reluctant to invest further in quality and, as a part of such an investment, to move to higher sampling rates and bit depths as standard.

And there is another issue, at this moment maybe less discussed but ultimately equally important, and it concerns the recording side. Good sound is far from being all about nominal sampling frequency and number of bits, during both recording and playback. On the recording side, it is in the first place about adequate acoustics, an adequate microphone setup, good recording amplifiers, mixing and other gear, and the way they are used. There are, and there will be, good and bad recordings, and no format will change this.

One particular problem with “special” hi-resolution recordings deserves consideration though: many new “hi-res recordings”, just like many so-called “audiophile recordings”, suffer from superficial attempts merely to impress, trying to aggressively convince listeners of the advantages of the new format.5 Such recordings, as “tweaked” as they are, have nothing to do with the quality that the new formats actually bring. In addition, many of them are still made by applying brickwall antialiasing filters during the A/D process, which mostly negates the hi-res advantages. With such treatment, it is hard to understand, or expect, the real benefits of a hi-res format.

And this is a somewhat paradoxical situation: the issues that come from the recording side, so basically from the people who should be the most interested in audio quality, might be the most important ones to correct in order to make hi-res a more significant reality.6

Hopefully, things in this regard will improve soon. In the longer term, it is important to draw the line: hi-resolution is not here to save suboptimal or bad recordings, or to bring tolerance for bad recording techniques. It is here to overcome the limitations of the “low-res” CD format, no more and no less.

Parts 3 and 4 of this article discuss these limitations in the frequency / time domain, i.e. the limitations associated with the sampling frequency.

The increase in resolution in the amplitude domain, i.e. bit depth, should also be taken into account, though the amplitude domain was never as controversial as the time / frequency domain aspects of the CD format. Basically, the problem with resolution in the amplitude domain is associated with the “quantization error”, and it gets more critical at low levels. A 16 bit depth appears sufficient for performance at full level, but at lower levels, where fewer bits are actually in use, things get much more critical. Thus, the THD of the 16 bit format rises from about 0.001% at full level to about 0.1% at -40 dBFS, and to about 1% at -60 dBFS. These artifacts might not be sonically benign, as they turn some microdynamics into signal correlated distortion. Different dithering techniques were therefore developed to randomize the correlated nature of these artifacts, which especially improves low level performance, but a higher bit depth is still welcome.
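The figures quoted above can be roughly cross-checked with the textbook estimate for an ideal N-bit quantizer, SNR ≈ 6.02·N + 1.76 dB for a full-scale sine. The sketch below is only that: it assumes an ideal, undithered quantizer, lumps the whole error into one figure instead of separating individual harmonics, and the function name is made up for illustration.

```python
def quantization_error_percent(bits: int, level_dbfs: float) -> float:
    """Approximate error-to-signal ratio, in percent, of an ideal quantizer.

    Assumes SNR = 6.02 * bits + 1.76 dB for a full-scale sine, and that the
    error floor stays where it is while the signal level drops.
    """
    snr_db = 6.02 * bits + 1.76 + level_dbfs  # level_dbfs is zero or negative
    return 100.0 * 10.0 ** (-snr_db / 20.0)


for level in (0.0, -40.0, -60.0):
    e16 = quantization_error_percent(16, level)
    e24 = quantization_error_percent(24, level)
    print(f"{level:6.0f} dBFS: 16 bit {e16:.4f} %   24 bit {e24:.6f} %")
```

Under these assumptions the 16 bit results land in the same range as the percentages in the text, and each additional bit lowers the error by about 6 dB.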

___________________

1 – We will limit ourselves here to the (linear) PCM audio and put the DSD / SACD approach aside, since it is about to disappear commercially, for some time at least.

2 – Please note however that this “media gets inexpensive” statement doesn’t equal or suggest anything like “music gets cheap”, and I will never support any such statement or point of view. Music itself, and the work of musicians, deserves and requires an adequate financial basis, or it is doomed to disappear. Hence I sincerely hope that music production will find its way through all these changes.

3 – One hour of uncompressed stereo 192/24 material is about 4 GB in size (about 2 GB at 96/24). Up to 50% can be saved by using lossless compression methods though.

4 – With a compression ratio of 11:1, the most often used 128 kbit/s mp3 requires less than 60 MB to store one typical CD (650 MB).

5 – To me, the most obvious problems are with natural reverberation, which is either exaggerated, or removed in order to emphasize the instruments. Listeners are supposed to perceive either of these artificial effects as an “improvement”. I remember practically the same approach being used more than two decades ago, to “convince” listeners that DDD “pedigreed” CDs were better than AAD ones.

6 – On the other hand, many standard new recordings are intentionally processed during re-mastering (post-production) to improve playback on cheap gear or in a noisy environment. The recording industry has developed many techniques for this purpose, some of them resulting in what has recently become known as the Loudness War. Since hi-resolution is supposedly intended for aficionados, hi-res re-mastering should avoid such techniques.
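The arithmetic behind footnote 4 can be checked in a few lines. This is a minimal sketch that assumes plain stereo 44.1 kHz / 16 bit PCM and the 650 MB disc size quoted there; the variable names are illustrative only.

```python
# Stereo CD audio: 44.1 kHz x 16 bits x 2 channels, expressed in kbit/s.
CD_BITRATE_KBPS = 44.1 * 16 * 2
MP3_BITRATE_KBPS = 128.0
CD_SIZE_MB = 650.0  # disc size quoted in footnote 4

compression_ratio = CD_BITRATE_KBPS / MP3_BITRATE_KBPS
mp3_size_mb = CD_SIZE_MB / compression_ratio

print(f"CD bit rate:       {CD_BITRATE_KBPS:.1f} kbit/s")
print(f"compression ratio: {compression_ratio:.1f}:1")
print(f"mp3 size per CD:   {mp3_size_mb:.0f} MB")
```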

Part 2: PC, USB, and hi-res audio

Do the PC & USB really fit into high quality audio today?

Obviously, music can easily be stored on, or streamed via, a PC. Usually, any serious audio system that employs a PC as a source has the digital signal sent out of the PC box to an external D/A converter. This digital transfer between PC and D/A converter can be accomplished via one of the widespread interfaces, USB being the most commonly used.

Back in 2008, I published this article on USB audio, which represented the state of things in those days. Back then, practical constraints were adaptive USB audio operation, and Audio Class 1.0 definition, which limits the audio bandwidth to 48 kHz (three channels maximum, for both playback and recording, or to 96 kHz one way).7

For anything above this frequency, custom software development was needed, and not only on the peripheral side of the USB interface: a Windows driver was also missing. In fact, Microsoft has up to now not come up with native Windows support for the USB Audio Class 2.0 definition (once again: to be distinguished from USB 2.0, see footnote 7), even though it participated in proposing these definitions back in 2006. Mac OS X has practically included Audio Class 2.0 support since the 10.5 days, though it did not officially support it before OS X 10.6.4. Linux USB Audio Class 2.0 support also did not exist before the summer of 2010.

Also, as said, all peripheral USB audio controllers available back then worked in so-called adaptive mode, operating as slave devices with respect to the host PC and requiring a PLL to recover the sampling clock from the incoming stream. USB can however also work in so-called asynchronous mode, but such equipment also required custom coding, as no ready made IC was able to perform such a task.

Asynchronous USB operation uses the bi-directionality of the USB interface to make the peripheral device (here, the D/A converter) act as a master, turning the PC into a slave that sends data only when and as requested by the DAC. This way, overall clock performance at the DAC side is not constrained or in any other way limited by the PC, or by an otherwise necessary PLL. It is determined solely by the quality of the free running oscillator placed locally in the DAC.
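The master/slave relationship can be pictured with a toy simulation. It is not the actual USB Audio Class feedback format and does not model any real controller: the function, its parameters, and the 1 ms service interval are assumptions made purely for illustration. The DAC consumes samples at the pace of its own oscillator and reports that pace back, and the host delivers whole samples accordingly.

```python
def simulate_async_link(dac_rate_hz=44_100.0, interval_s=0.001,
                        intervals=10_000, start_fill=256.0):
    """Toy model: the DAC's own clock sets the pace and the host follows.

    Returns the (lowest, highest) buffer fill seen at the DAC, in samples.
    """
    fill = start_fill
    owed = 0.0  # fractional samples the host still owes the DAC
    lowest = highest = fill
    for _ in range(intervals):
        # DAC side: the free running local oscillator decides how many
        # samples are consumed per interval; this figure is reported back.
        consumed = dac_rate_hz * interval_s
        # Host side: deliver whole samples only, carrying the remainder over.
        owed += consumed
        sent = int(owed)
        owed -= sent
        fill += sent - consumed
        lowest = min(lowest, fill)
        highest = max(highest, fill)
    return lowest, highest


low, high = simulate_async_link()
print(f"buffer fill stayed between {low:.2f} and {high:.2f} samples")
```

Because the host only follows the reported rate, the buffer level in this model never wanders by more than about one sample, and nothing on the DAC side has to lock onto the host's timing.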

Though the cooperation itself was arranged earlier, Audial has since April this year been actively working with the London based luxury audio brand Gramofone to develop the world’s first asynchronous USB, non oversampling D/A converter compatible with up to 192 kHz / 24 bit. To introduce this device, several weeks ago I posted this information to the Audial blog area.

In addition, to really understand how audio playback from a PC via the USB interface can be accomplished without compromise, the following issues should be considered:

1. Jitter performance. Asynchronous USB puts the DAC into master mode, and the sampling clock is generated locally in the DAC, so it can be as good as it gets. Jitter depends solely on the quality of the local clock and its local distribution scheme, and not on the source, the interface, and their associated problems. A PLL becomes entirely redundant.

2. Galvanic and HF isolation. This is virtually necessary for both top performance and simplicity of use, as it frees users from the headaches associated with PC-induced noise and possible ground loops. At the technical level, it can be executed without compromise by using digital isolators, regardless of their usually poor low-frequency jitter performance, because the master clock is located on the DAC side of the isolation.

3. Bandwidth. Now we have a USB Audio Class 2.0 compliant system. Please note that the Class 2.0 definition makes it possible to transfer not only 192/24 stereo, but also multichannel audio, via the already widespread USB 2.0 interface.8
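The bandwidth point is easy to put in numbers. The sketch below assumes samples packed as exactly 24 bits and compares the raw PCM bit rate with the nominal 480 Mbit/s signalling rate of USB 2.0 High Speed. Real devices often pad samples to 32 bit slots, and protocol overhead means the usable throughput is well below the nominal figure, so this shows the order of magnitude only.

```python
USB2_HIGH_SPEED_BPS = 480_000_000  # nominal signalling rate, not usable payload


def pcm_bitrate_bps(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Raw bit rate of uncompressed linear PCM."""
    return sample_rate_hz * bit_depth * channels


stereo_bps = pcm_bitrate_bps(192_000, 24, 2)
ten_channel_bps = pcm_bitrate_bps(192_000, 24, 10)

print(f"192/24 stereo:        {stereo_bps / 1e6:.3f} Mbit/s")
print(f"192/24 x 10 channels: {ten_channel_bps / 1e6:.2f} Mbit/s each way")
print(f"both ways, share of nominal USB 2.0 rate: "
      f"{100 * 2 * ten_channel_bps / USB2_HIGH_SPEED_BPS:.0f} %")
```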

Talking further on practical issues, the native Windows USB audio driver still doesn’t support the Audio Class 2.0 definitions at this moment, so an additional driver is required. Mac OS X has practically worked with Class 2.0 devices since version 10.5, but this is not officially supported and there are no guarantees regarding stability. Mac OS X 10.6.4, however, is officially supported and should work well.

Now, regarding technical solutions, a no-compromise asynchronous USB audio device requires two high quality local master oscillators, one for the 44.1/88.2/176.4 kHz and another for the 48/96/192 kHz sampling frequencies (with an appropriate switching scheme, of course), and a dedicated low noise supply is mandatory. Once you consider all this, on top of galvanic decoupling and all the everlasting requirements of high quality audio, such as the audio circuitry itself, supply, passive components, layout, and the like, you will understand why this is not, and will not become, a low price product category.

But the good news is that we can claim another audio circle closed: the jitter performance of USB audio can be as good as the performance of crystal oscillators. And in one very good way, regarding the purity of the clocking scheme, we are with this basically back at the point where we were with an integrated CD player (please see page 2 of this article), but now able to use a PC and all the files stored there, 192 kHz ones included, instead of (only) the “silver” disc.

___________________

7 – Back then, USB 2.0 (“High Speed”) was already commonly accepted by hardware manufacturers, so please note that USB 2.0, which is a general USB specification from 2000, is different from USB Audio Class 2.0, which is a USB definition for audio devices from 2006.

8 – USB 3.0, released in November 2008, is not necessary for this audio purpose. Up to ten 192/24 audio channels can be run both ways over USB 2.0.

Part 3: Oversampling and upsampling

High resolution recordings? Don’t we already have oversampling and upsampling to increase the resolution?

No, we do not, and this has been one of the most abused misunderstandings in digital audio. Neither oversampling nor upsampling has anything to do with resolution. So let’s go back to the basics.

Just a few years after its initial release, digital audio adopted oversampling almost without question. Basically, oversampling was supposed to provide low pass filtering in the digital domain, and thus help reconstruct the original waveform. This way, only slight analog post-filtering is sufficient, and all the heavy analog filtering hardware (many coils, or multiple opamp stages), with its associated problems and expenses, became outmoded in favor of one inexpensive oversampling IC.

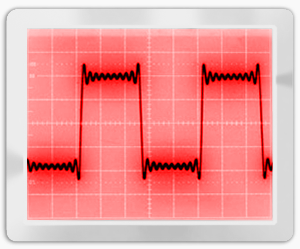

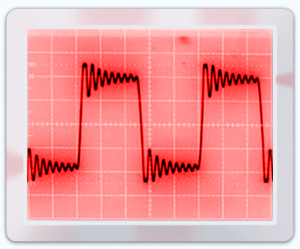

The basic supposed requirement for low pass filtering comes from the usual zero order hold function of DAC chips, which produces artifacts above the audio band, so-called images. Zero order hold denotes waveform generation where the previous sample’s amplitude level is held until the next sample appears.9 Such a staircase waveform (the accompanying graph shows the original waveform in blue, the staircase waveform in red, and the error signal in black), when observed in the frequency domain, comprises said artifacts: every signal frequency is accompanied by a pair of images around the sampling frequency, and around its multiples as well.10

To visualize the actual problem, here is the graph I posted back in 2003 on my personal site (not online anymore). It shows the spectral content of supposedly clean 1 kHz, 5 kHz, 10 kHz, and 20 kHz sinewave signals, sampled at a 44.1 kHz sampling frequency. Obviously, 1 kHz produces images at 43.1 kHz and 45.1 kHz, then at 87.2 kHz and 89.2 kHz, and so on. So, the sampled frequency is mirrored around the sampling frequency and its multiples.11

Fig. 1: Images of 1 kHz, 5 kHz, 10 kHz and 20 kHz sinewave, sampled at 44.1 kHz

There are two important points regarding the levels of these images. The first is, the higher the sampled signal frequency, the stronger the images. The images produced by the 10 kHz signal are stronger than the images of the 1 kHz signal. And the second is, the levels of these images are the highest around the first multiples of the sampling frequency, and they decrease around the higher multiples of the sampling frequency. Consequently, it is much easier to filter the fourth than the first set of images.
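Both points can be reproduced numerically. The following sketch places the images at k·fs ± f and weights each one with the sin(x)/x envelope of an ideal zero order hold. It assumes an ideal, filterless multibit converter, and the function names are made up for this illustration.

```python
import math


def zoh_level_db(freq_hz: float, fs_hz: float) -> float:
    """Level in dB of a component at freq_hz under the sin(x)/x envelope of
    an ideal zero order hold running at fs_hz (not defined at 0 Hz)."""
    x = math.pi * freq_hz / fs_hz
    return 20.0 * math.log10(abs(math.sin(x) / x))


def image_frequencies(signal_hz: float, fs_hz: float, multiples: int = 2) -> list:
    """Images at k*fs - f and k*fs + f for k = 1..multiples."""
    result = []
    for k in range(1, multiples + 1):
        result.extend([k * fs_hz - signal_hz, k * fs_hz + signal_hz])
    return result


FS_HZ = 44_100.0
for f in (1_000.0, 5_000.0, 10_000.0, 20_000.0):
    parts = [f"{img / 1000:.1f} kHz at {zoh_level_db(img, FS_HZ):+.1f} dB"
             for img in image_frequencies(f, FS_HZ)]
    print(f"{f / 1000:4.0f} kHz signal ({zoh_level_db(f, FS_HZ):+.1f} dB): "
          + ", ".join(parts))
```

In this model the first image of the 10 kHz tone comes out clearly stronger than that of the 1 kHz tone, and the images around twice the sampling frequency are weaker than those around the sampling frequency itself.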

Now, if we apply oversampling, by interpolating the existing samples, we get new “samples” between the original ones. The staircase waveform thus becomes denser in the time domain (and in the amplitude domain too, but we can put that aside here). We do not produce any useful musical information that wasn’t already there, but we do remove some images. With 4x oversampling applied, only every fourth pair of images remains and all the others are removed, exactly as if we were using a 4x higher sampling frequency. Most importantly, we remove the most critical first sets of images, which are, as we have seen, not only the closest to the audio band but also the highest in level: the first lower image of a 20 kHz signal sits very close to the signal itself, at 24.1 kHz, and has about the same level as the signal. Oversampling thus gives much more headroom, i.e. a notably wider transition band for analog (post)filtering, so much simpler analog post-filters can be used. Such a combination of digital oversampling and analog post-filtering has been the audio industry standard since the mid-80s.

So, it is important to understand that oversampling, and upsampling for that matter, was never meant to improve the resolution, or anything like that. Claims like this are handy for marketing purposes, but they have nothing to do with reality. The only way to improve the resolution of the system is to increase its native sampling frequency (and bit depth, of course, as far as the amplitude domain is concerned) during the recording or mastering process. This is also an important point: over- and upsampling on one side, and a high native sampling frequency on the other, are totally different animals. The sole purpose of over- and upsampling is to provide filtering in the digital domain, by interpolating existing samples and thus forming a more favorable waveform shape that is easier to post-filter. But such an oversampled waveform comprises no more useful musical information than the original waveform does.12

So, once you apply, say, 4x oversampling, only every fourth pair of images in the row displayed on the graphs above remains, and it is then easy to remove with gentle analog post-filtering.
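As a small illustration of what remains after oversampling, the sketch below lists the surviving image pairs and the resulting transition band for the analog post-filter. It assumes an ideal interpolation filter that removes the intermediate images completely, which real oversampling filters only approximate, and the function names are hypothetical.

```python
def remaining_images(signal_hz: float, fs_hz: float,
                     oversampling: int = 1, up_to_multiple: int = 8) -> list:
    """Image pairs that survive ideal interpolation by an integer factor.

    With ideal N-times oversampling only the pairs around multiples of
    N * fs remain, as if the material had been sampled at N * fs.
    """
    pairs = []
    for k in range(1, up_to_multiple + 1):
        if k % oversampling == 0:
            pairs.append((k * fs_hz - signal_hz, k * fs_hz + signal_hz))
    return pairs


def transition_band_hz(top_signal_hz: float, fs_hz: float,
                       oversampling: int = 1) -> float:
    """Gap between the top of the audio band and the first remaining image."""
    return oversampling * fs_hz - 2.0 * top_signal_hz


for factor in (1, 4):
    first = remaining_images(20_000.0, 44_100.0, factor)[0]
    gap = transition_band_hz(20_000.0, 44_100.0, factor)
    print(f"{factor}x: first image pair {first[0] / 1000:.1f} / "
          f"{first[1] / 1000:.1f} kHz, transition band {gap / 1000:.1f} kHz")
```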

___________________

9 – Other than zero order hold, there are also the first, second, etc. order holds, which include more sophisticated waveform generation from discrete samples, but which are practically never used in this audio domain.

10 – Another phenomenon associated with zero order hold is its typical frequency response curve, with a roll-off at high frequencies within the passband that follows a sin(x)/x function in the frequency domain, sometimes also called sinc roll-off, sinc envelope, or aperture distortion. Thus, an “uncompensated” (read: filterless) system with a 44.1 kHz sampling frequency has a roll-off of about -0.8 dB at 10 kHz, dropping to -3.2 dB at 20 kHz. Brickwall filtering, which is required by the Shannon-Nyquist criteria for reconstruction of bandlimited signals, also corrects for the sinc roll-off, making the frequency response within the passband perfectly flat. Normally, in this regard, it also does not matter whether the filtering is done in the digital (oversampling) or in the analog domain.

11 – Strictly speaking, this graph fully applies only to “real multibit” D/A conversion and converters, commonly also referred to as “R2R” (which, again strictly technically speaking, is not correct, since only a few converters were ever really built as R2R). Sigma/delta processors involve other mechanisms that influence their output noise spectra, the most important, apart from the modulator itself, being the noise shaper.

12 – Oversampling normally denotes moving the sampling frequency to an integer multiple of the original frequency, say from 44.1 kHz to 176.4 kHz (4x oversampling), whereas upsampling is the process where the resulting frequency is not an integer multiple of the original one. One can actually find the term upsampling used for different things, but during the last decade it has become established as a synonym for asynchronous sample rate up-conversion. So conversion from 44.1 kHz to 192 kHz or to 1.536 MHz would be “upsampling”.

Oversampling is performed by adding new samples interpolated from the existing ones, whereas upsampling involves overall re-sampling, usually accomplished by two sub-processes: oversampling to the least common multiple of the original and target frequencies, and downsampling to the target frequency. Again, obviously no process here can add any useful musical information, regardless of the frequencies, algorithms, or any imaginable technique used.

Asynchronous sample rate conversion, whether up-conversion or down-conversion, is as a process basically required only in a mixing environment, where it makes it possible to accept and process any sampling frequency. Basically, ASRC operation includes some buffering to store the data prior to processing, and this buffering also gives the ASRC a certain ability to reject some of the incoming sampling jitter. This is why it appears handy to some designers of playback only devices. However, the way it influences jitter performance is also usually misunderstood, as this is an all or nothing matter: all the sampling jitter that is not removed prior to processing is irrecoverably embedded in the output data.
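The two-step description of re-sampling in footnote 12 (up to the least common multiple, then down to the target) reduces to simple integer arithmetic. The sketch below only computes the factors for a fixed ratio; it is an illustration with a made-up function name, and practical converters usually avoid building the intermediate stream explicitly.

```python
import math


def resampling_factors(source_hz: int, target_hz: int):
    """Return (up, down, intermediate_hz) for conversion via the least
    common multiple of the source and target sampling frequencies."""
    common = math.gcd(source_hz, target_hz)
    up = target_hz // common
    down = source_hz // common
    intermediate_hz = source_hz * up  # least common multiple of both rates
    return up, down, intermediate_hz


for target in (176_400, 192_000):
    up, down, mid = resampling_factors(44_100, target)
    print(f"44100 -> {target}: up x{up}, down /{down}, "
          f"intermediate rate {mid / 1e6:.4f} MHz")
```

The 4x oversampling case needs no downsampling step at all, while 44.1 kHz to 192 kHz calls for a 640/147 ratio through an intermediate rate of about 28 MHz.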

Part 4: Hi-res, non-oversampling

Non-oversampling approach and sampling frequency

In the late nineties, mostly as a result of Mr. Ryohei Kusunoki’s articles published in MJ Magazine, audio people started realizing that digital filtering, and filtering in general, is not that necessary. The digital system appeared to work, and to sound very good at that, without filters. Well, why not, after all, provided that our electronics can cope with all those images without distorting because of them, and that our ears naturally low pass them at the end of the “chain”?

Something about our understanding of requirements in this domain was apparently wrong.

And, admittedly, I have been a non-oversampling guy for almost a decade.

So everyone will conclude that I don’t believe in digital filtering. This is correct. But many will also mistakenly assume that everyone who doesn’t believe in digital filtering, believes that the CD format is enough. This is not correct. Actually, a non-oversampling approach in digital playback requires a higher native sampling frequency.

Moreover, and as opposed to what the basic Shannon-Nyquist theorem proposes, I consider a digital system able to convey a (continuous) waveform relatively correctly only if its sampling frequency is at least about ten times higher than the waveform frequency itself, because ten samples may guarantee a relatively decently represented sinewave. Looking in the frequency domain, this would make us practically free of all those images, and the playback chain consequently free of the extreme filtering required by a Shannon-Nyquist system, which also leaves the transient response of our setup intact.

So, this might run against what common wisdom perceives as a “requirement”, and hence I will say it explicitly: oversampling is intended for the “low res” formats, where it deals with their filtering requirements. Hi-res formats don’t need it. So, basically, with a native sampling frequency of about 200 kHz, are you done in all these aspects? Well yes, you are, and you can safely forget all the over- and up-sampling, and any imaginable sort of similar processing.
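The ten-samples-per-cycle rule of thumb translates into numbers as follows. This is only the arithmetic of the argument above, with hypothetical helper names, and not a claim about audibility.

```python
def samples_per_cycle(fs_hz: float, signal_hz: float) -> float:
    """How many samples describe one cycle of a tone at signal_hz."""
    return fs_hz / signal_hz


def highest_tone_hz(fs_hz: float, min_samples_per_cycle: float = 10.0) -> float:
    """Highest tone that still gets the requested number of samples per cycle."""
    return fs_hz / min_samples_per_cycle


for fs in (44_100.0, 96_000.0, 192_000.0):
    print(f"fs = {fs / 1000:5.1f} kHz: "
          f"{samples_per_cycle(fs, 20_000.0):.1f} samples per 20 kHz cycle, "
          f"ten samples per cycle up to {highest_tone_hz(fs) / 1000:.2f} kHz")
```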

As a bottom line, let’s look at the benefits of higher sampling frequency with respect to the lower one:

1. It sets easy requirements regarding analog filtering – this is what oversampling and upsampling can do too.

2. It brings a higher resolution in the time domain – this is what oversampling and upsampling can NOT do.

Part 5: Surpassing the oversampling

Can hi-res turn oversampling and upsampling into historical obsolescence?

In some way it would be good – natively higher sampling frequency indeed encompasses everything they ever did, and without their disadvantages – however it is not likely to happen soon.

But let’s look briefly at the problems with these digital filters.

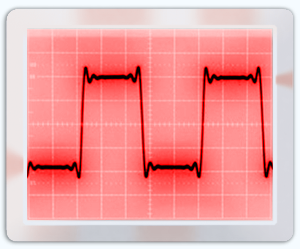

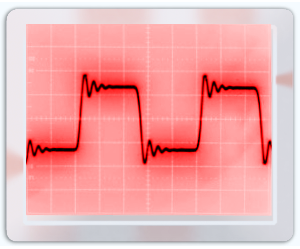

Firstly, just like all the filters in this world, they have their own frequency and transient responses, the one in the frequency domain being tied to the one in the time domain. So, the more radical the cut-off, the more ringing on impulse signals as well. Of course, it is still possible to argue that the transient response of today’s still ubiquitous brickwall finite impulse response filters (FIR, a.k.a. non-recursive or linear phase filters), even if it looks intuitively wrong for its pre- and post-ringing13, is actually mathematically correct. Such a response actually represents the band limited signal itself, with phase left intact (“linear”) within the passband. Nothing more and nothing less, and in that way it would be, err, perfect.

Fig. 2: Brickwall FIR filter transient response

(1 kHz squarewave measured)

The next graph, a mathematical representation of a 1 kHz square wave with harmonics up to 20 kHz included and those above 20 kHz omitted, nicely matches the previous graph and might support such a point of view.14

Fig. 3: “Perfect” FIR filter transient response

(1 kHz squarewave, mathematical presentation)
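The mathematical presentation in Fig. 3 can be recreated as a truncated Fourier series. The sketch below sums the odd harmonics of a 1 kHz square wave up to 20 kHz and reports the peak; it is an idealised calculation, not a model of any particular filter chip, and the function name is illustrative.

```python
import math


def bandlimited_square(t_s: float, fundamental_hz: float = 1_000.0,
                       cutoff_hz: float = 20_000.0) -> float:
    """Fourier series of a +/-1 square wave with harmonics above cutoff dropped."""
    total = 0.0
    n = 1
    while n * fundamental_hz <= cutoff_hz:
        total += math.sin(2.0 * math.pi * n * fundamental_hz * t_s) / n
        n += 2  # a square wave contains odd harmonics only
    return 4.0 / math.pi * total


PERIOD_S = 1.0 / 1_000.0
POINTS = 10_000
wave = [bandlimited_square(i * PERIOD_S / POINTS) for i in range(POINTS)]
peak = max(wave)
print(f"peak {peak:.3f}, i.e. {100.0 * (peak - 1.0):.0f} % above the flat top")
```

The ringing next to each edge, with a peak overshoot of roughly 18 % of the flat-top level, is what footnote 14 refers to as the Gibbs phenomenon.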

However, this response, which intuitively still looks a bit bizarre, corresponds pretty well to some subjective qualities traditionally associated with the transient response of a system. Namely, all the brickwall FIR filtered digital systems I have heard to date had big problems conveying natural decay, space, and timbre. In addition, they are prone to boosting intense reverb and echoing, false “details”, which are only an imitation of a desirable system’s ability to convey unrestrained resolution within the artists’ overall coherent performance. And there was no brickwall filtered digital system that was able to convey a natural sound stage, with precise positioning and natural instrument dimensions. Instead of such natural space, they boost an artificial reverberation of undefined spaciousness, which often swamps the instruments themselves.15

There are several reasons behind this, and we won’t go into detail for now. They can be associated both with the hardware solutions and with the algorithms used, so it is worth noting that FIR filters with practically identical nominal responses can still sound quite different, significantly better or worse.

Also, FIR filters do not necessarily always employ such a steep cut-off, and sometimes a softer response is applied as a compromise that lowers the ringing. Such and similar solutions were often recognized for their more appealing subjective performance, but the basic sonic properties of FIR filters remain.

Fig. 4: Soft FIR filter transient response

(1 kHz squarewave measured)

So far so good. But one can still sacrifice linear phase response and thus get rid of pre-ringing, and make, by digital means, a filter with response equivalent to the filters known from the analog world. And indeed, such “minimum phase” filters (usually identified as “recursive” or IIR – “infinite impulse response” filters), sound to my ears more natural than linear phase filters do.

Fig. 5: Brickwall IIR (minimum phase) filter transient response

(1 kHz squarewave measured)

Again, a less steep response can be applied, and it will lower the post-ringing. But then again, a minimum phase filter, because its phase response is not linear, is not mathematically correct anymore either.

Fig. 6: Soft IIR (minimum phase) filter transient response

(1 kHz squarewave measured)

Anyhow, it is only a higher actual sampling frequency that solves all these concerns, and brings us everything that’s ultimately needed, and without significant filtering: clean transient response, with neither pre- nor post-ringing, and with an intrinsically flat frequency response up to 20 kHz.
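How flat a filterless system is at 20 kHz can be estimated from the sin(x)/x roll-off of an ideal zero order hold. The sketch below compares a few sampling frequencies; it assumes an ideal hold and no analog filtering at all, and the function name is made up for this example.

```python
import math


def zoh_droop_db(freq_hz: float, fs_hz: float) -> float:
    """Passband roll-off in dB of an ideal zero order hold at freq_hz,
    valid for frequencies above 0 Hz and below fs_hz."""
    x = math.pi * freq_hz / fs_hz
    return 20.0 * math.log10(math.sin(x) / x)


for fs in (44_100.0, 96_000.0, 192_000.0):
    print(f"fs = {fs / 1000:5.1f} kHz: "
          f"{zoh_droop_db(10_000.0, fs):+.2f} dB at 10 kHz, "
          f"{zoh_droop_db(20_000.0, fs):+.2f} dB at 20 kHz")
```

At 44.1 kHz the result agrees closely with the roll-off figures given in footnote 10, while at 192 kHz the drop at 20 kHz shrinks to a fraction of a dB.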

However, the currently standard 44.1 kHz / 16 bit format shall continue to exist and dominate the mass market for quite some time, and all the digital processing conventionally associated with its playback in conventional devices, such as oversampling, will remain standard as well. By contrast, in the high end audio world, where oversampling is a questionable technique, things may be different. The use of hi-resolution material without any processing of this kind may well fit one of the purposes of the high end world: to be a no-compromise, avant-garde oriented place.

As a note, please take into account that one somewhat different kind of oversampling will remain associated with D/A conversion: the one involved in delta/sigma modulation, which turns multibit PCM into a low bit output, making the actual D/A conversion less critical and less expensive. Cheaper delta/sigma D/A converters are 1-bit, whereas more ambitious devices employ a 5- or 6-bit output. In fact, delta/sigma is how the vast majority of currently produced audio D/A converter ICs operate, and there are only a few remaining classic multibit exceptions. Delta/sigma modulation, which trades the original bit depth and sampling frequency for fewer bits at a higher sampling frequency, is not necessarily associated with low pass filtering though. Hence, the things explained above about low pass filtering do not necessarily apply there.
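The principle of trading bit depth for speed can be shown with the simplest possible case, a first-order 1-bit modulator. This is a textbook toy and not how any particular converter IC is built: practical devices use higher-order modulators, noise shaping, and often multi-level outputs, as noted above. The function name and test values are illustrative.

```python
def first_order_delta_sigma(samples):
    """1-bit first-order delta/sigma modulator for inputs within -1.0..1.0.

    Each output is +1.0 or -1.0; the running average of the output stream
    tracks the input value.
    """
    integrator = 0.0
    feedback = 0.0
    bits = []
    for x in samples:
        integrator += x - feedback  # accumulate the error made so far
        feedback = 1.0 if integrator >= 0.0 else -1.0
        bits.append(feedback)
    return bits


stream = first_order_delta_sigma([0.5] * 4_000)
average = sum(stream) / len(stream)
print(f"first outputs: {stream[:8]}")
print(f"average of the 1-bit stream: {average:.3f}")
```

A constant input of 0.5 produces a stream of +1 and -1 values whose average settles at 0.5, so the amplitude information is carried by the density of the pulses rather than by the word length.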

___________________

13 – Apart from the mathematical presentation of 1 kHz square wave, all the filter responses shown here are the waveforms I measured at the output of the Wolfson WM8741 chip fed by 1 kHz squarewave. WM8741 offers a nice choice of all these filter types.

14 – In mathematics, this is known as the Gibbs phenomenon, which describes such behavior of a Fourier series around a jump discontinuity. In turn, it points out how difficult the actual waveform can be to approximate around its discontinuities (read: transients) by using a finite number of sinewaves (read: a brickwall filtered system).

15 – There is an interesting proposal of applying brickwall bandlimiting to the good old vinyl record playback system, as a demonstration of its detrimental sonic effects.

Part 6: PC opportunities

Regarding PCs, there are two points to consider, and only one is associated with the previously discussed hi-res file playback possibility.

In addition, on the lower-res, CD format side, a PC-connectable DAC that is also 192 kHz / 24-bit compatible, and which doesn’t perform oversampling itself, can be the way for those wanting both the oversampling and non-oversampling approaches under their own control. So, should you consider a clean path the preferred one, you can simply send a 44.1 kHz / 16-bit file directly to the DAC. Should you consider over- or upsampling a 44.1 kHz / 16-bit file advantageous, you can perform it in PC software, and the DAC will accept the processed signal at up to 192 kHz and 24 bits. At this moment, most of the audio population has probably never had a chance to listen directly to the effects of this kind of processing and to reach their own conclusion on the benefits or shortcomings of oversampling. Such a device is a chance to satisfy this curiosity as well. In addition, with adequate software, different filter types can be checked easily.
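As a rough illustration of what software oversampling does to a 44.1 kHz file, the sketch below upsamples by an integer factor: conceptually it inserts zeros between the original samples and then applies a windowed-sinc low pass filter with its cutoff at the original Nyquist frequency. The function names, the Hann window and the filter length are my assumptions for the example, not a description of any particular player or editor. A factor of 4 gives 176.4 kHz, which fits under the 192 kHz limit; converting to exactly 192 kHz would need a non-integer ratio, which is not covered here. The non-oversampling path needs no code at all: the samples go to the DAC unchanged.

```python
import math


def lowpass_taps(factor, half_len=32):
    """Hann-windowed sinc with its cutoff at the original Nyquist frequency.

    half_len is the (assumed) filter half-length in original sample periods.
    """
    mid = half_len * factor
    taps = []
    for i in range(2 * mid + 1):
        t = (i - mid) / factor                  # time in original sample periods
        sinc = 1.0 if t == 0 else math.sin(math.pi * t) / (math.pi * t)
        window = 0.5 + 0.5 * math.cos(math.pi * (i - mid) / mid)
        taps.append(sinc * window)
    return taps


def upsample(samples, factor=4, half_len=32):
    """Integer-ratio upsampling: zero stuffing followed by low pass filtering."""
    taps = lowpass_taps(factor, half_len)
    mid = len(taps) // 2
    out = []
    for m in range(len(samples) * factor):
        # Only the original (non-zero) samples contribute to output sample m.
        k_lo = max(0, -((mid - m) // factor))   # ceil((m - mid) / factor)
        k_hi = min(len(samples) - 1, (m + mid) // factor)
        acc = 0.0
        for k in range(k_lo, k_hi + 1):
            acc += samples[k] * taps[m - k * factor + mid]
        out.append(acc)
    return out


if __name__ == "__main__":
    cd_rate = [math.sin(0.1 * k) for k in range(200)]   # stand-in for 44.1 kHz data
    hi_rate = upsample(cd_rate, factor=4)               # 4 x 44.1 kHz = 176.4 kHz
    print(len(cd_rate), len(hi_rate))
```

This particular filter leaves the original samples in place and only computes the new ones in between. It is also a symmetric, linear phase filter, so it exhibits exactly the pre- and post-ringing discussed earlier; swapping `lowpass_taps` for another design is the kind of experiment such a DAC makes possible.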

That said, software able to perform processing of this kind in real time is scarce at this moment. To use the possibilities that the PC is now really opening, the user would at this moment mostly be directed to “offline” processing in sound editing software. Still, there is every reason to expect improvements, and more software of this kind to appear in the near future, whether as stand-alone utilities or in the form of updates or plug-ins for existing software players.16

And things do not necessarily stop at playback itself: the PC, now successfully integrated into the highest performance audio system, may come in handy for all the other kinds of processing that may be useful in audio, such as room acoustics equalization in the digital domain. It can also be used as a digital speaker crossover, because USB, provided that it supports the Audio Class 2.0 definitions, can operate as a multichannel audio interface, etc.
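To give an idea of what a digital speaker crossover does, here is a minimal first-order sketch: a one-pole low pass feeds the woofer channel, and the remainder (input minus low pass output) feeds the tweeter channel, so the two outputs always sum back to the original signal. The function name, the 2.5 kHz crossover frequency and the filter order are assumptions for illustration only; practical crossovers normally use steeper filters and are tuned to the actual drivers.

```python
import math


def crossover(samples, fs=44100.0, fc=2500.0):
    """Split a signal into low and high bands (first-order, illustrative).

    fs: sampling frequency in Hz
    fc: assumed crossover frequency in Hz
    Returns (low, high); low[i] + high[i] equals samples[i].
    """
    a = math.exp(-2.0 * math.pi * fc / fs)    # one-pole smoothing coefficient
    state = 0.0
    low, high = [], []
    for x in samples:
        state = (1.0 - a) * x + a * state     # one-pole low pass
        low.append(state)                     # woofer channel
        high.append(x - state)                # tweeter channel (complement)
    return low, high


if __name__ == "__main__":
    signal = [math.sin(2.0 * math.pi * 1000.0 * n / 44100.0) for n in range(441)]
    woofer, tweeter = crossover(signal)
    print(max(abs(w + t - s) for w, t, s in zip(woofer, tweeter, signal)))
```

Each band would then be sent to its own DAC channel and amplifier, which is where a multichannel USB interface comes in.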

—–

In many ways it is beyond doubt: audio has moved to the PC and its associated storage media, as well as to internet streaming, and the upcoming audio future will be determined by this fact. And we were the first to come up with a real reference, yet easy to use, D/A converter in the PC area, which is an asynchronous USB, non-oversampling, up to 192 kHz / 24-bit compatible device. On the other side, CD sales show a rapidly decreasing tendency, and the CD will probably disappear from the stores as a physical medium soon, probably in less than a couple of years. There are however many existing CD collections around. And even though some owners of such collections have been migrating to the PC, ripping their CDs to hard drives, many owners of valuable CD collections won’t part with them that easily. Finally, classic CD references such as the Philips CDM0/1/2 / TDA1541A can achieve stunning performance, and, for the time being, there will also be considerable demand for playback devices of this kind. Audial won’t neglect this demand either, and will also come out with some kind of final reference device for CD media soon.

___________________

16 – Foobar2000 in fact already has an interesting set of DSP utilities that operate in real time, including one that sets the output sampling rate. What I’m after is fully customizable processing though.